Much of my recent writing has concerned future scenarios in which an artificial intelligence (AI) breakthrough leaves us humans no longer the smartest agents on the planet in terms of general intelligence, in which case we cannot (I argue) count on automatically remaining in control; see, e.g., Chapter 4 in my book Here Be Dragons: Science, Technology and the Future of Humanity, or my paper Remarks on artificial intelligence and rational optimism. I am aware of many popular arguments for why such a breakthrough is not around the corner but can only be expected in the far future or not at all, and while I remain open to the possibility that their conclusion is right, I typically find the arguments themselves at most moderately convincing.¹ In this blog post I will briefly consider two such arguments, and give pointers to related and important recent developments. The first argument is one that I've considered silly for as long as I've given any thought at all to these matters, which goes back at least to my early twenties. The second argument is perhaps more interesting, and in fact one that I've mostly been taking very seriously.

1. A computer program can never do anything creative, as all it does is to blindly execute what it has been programmed to do.

This argument is hard to take seriously, because if we do, we must also accept that a human being such as myself cannot be creative, as all I can do is to blindly execute what my genes and my environment have programmed me to do (this touches on the tedious old free will debate). Or we might actually bite that bullet and accept that humans cannot be creative, but with such a restrictive view of creativity the argument no longer works, as creativity will not be needed to outsmart us in terms of general intelligence. Anyway, the recent and intellectually crowd-sourced paper The Surprising Creativity of Digital Evolution: A Collection of Anecdotes from the Evolutionary Computation and Artificial Life Research Communities offers a fascinating collection of counterexamples to the claim that computer programs cannot be creative.

2. We should distinguish between narrow AI and artificial general intelligence (AGI). Therefore, as far as a future AGI breakthrough is concerned, we should not be taken in by the current AI hype, because it is all just a bunch of narrow AI applications, irrelevant to AGI.

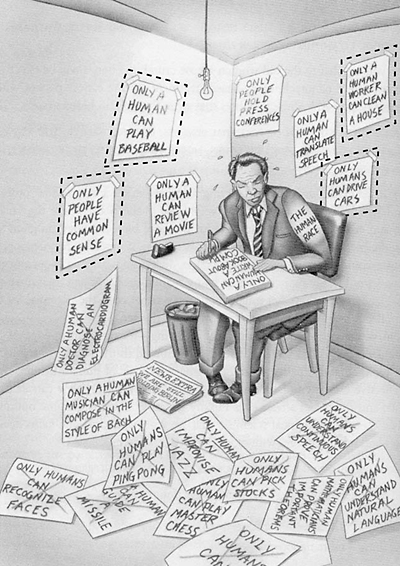

The dichotomy between narrow AI and AGI is worth emphasizing, as UC Berkeley computer scientist Michael Jordan does in his interesting recent essay Artificial Intelligence - The Revolution Hasn’t Happened Yet, which offers a healthy dose of skepticism concerning the imminence of AGI. And while the claim that progress in narrow AI is not automatically a stepping stone towards AGI seems right, the oft-repeated stronger claim that no progress in narrow AI can serve as such a stepping stone seems unwarranted.

Can we be sure that the poor guy in the cartoon above (borrowed from Ray Kurzweil's 2005 book) can carry on much longer in his desperate production of examples of what only humans can do? Do we really know that AGI will not eventually emerge from a sufficiently broad range of specialized AI capabilities? Can we really trust Thore Husfeldt's image² suggesting that Machine Learning Hill is just an isolated hill rather than a slope leading up towards Mount Improbable, where real AGI can be found?

I must admit that my certainty about such a topography in the landscape of computer programs has been somewhat eroded by recent dramatic advances in AI applications. One example I've mentioned before is AlphaZero's extraordinary and self-taught way of playing chess, made public in December last year. Even more striking is last week's demonstration of the Google Duplex personal assistant's ability to hold intelligent phone conversations. Have a look:³

Footnotes

1) See Eliezer Yudkowsky's recent There’s No Fire Alarm for Artificial General Intelligence for a very interesting comment on the lack of convincing arguments for the non-imminence of an AI breakthrough.

2) The image appears about 22:30 into the video of Thore's talk, which I really recommend watching in its entirety: it is both instructive and entertaining, and I had the privilege of hosting it in Gothenburg last year.

3) See also the accompanying video exhibiting a wider range of Google AI products. I am a bit dismayed by its evangelical tone: we are told what wonderful enhancements of our lives these products offer, and there is no mention at all of possible social downsides or risks. Of course I realize that this is the way of the commercial sector, but I also think a company of Google's unique and stupendous power has a duty to rise above that narrow-minded logic. Don't be evil, goddamnit!

Comments

Gosh, how exciting the future is going to be! And not in fifty years, but quite certainly as early as the next decade. Or even right now!

Regarding footnote 3: https://arstechnica.com/gadgets/2018/05/google-employees-resign-in-protest-of-googlepentagon-drone-program/

Thanks for the info. Clearly worth a blog post of its own.
